DeepSeek R1: China’s ‘Hold my beer’ AI moment?

Written by Zoi Roupakia

The release of DeepSeek R1, the latest large language model (LLM) developed by a Chinese hedge-fund-backed AI team, is making waves for its efficiency, architecture, and open-source accessibility. Where many models lean on brute-force computing power, DeepSeek is more selective about how much of the model it runs for each input.

Many LLMs operate like builders who use every tool for every task — effective, but expensive and inefficient. DeepSeek, by contrast, functions more like a team of specialised experts: only the most relevant “builders” are activated for each job while others remain idle.

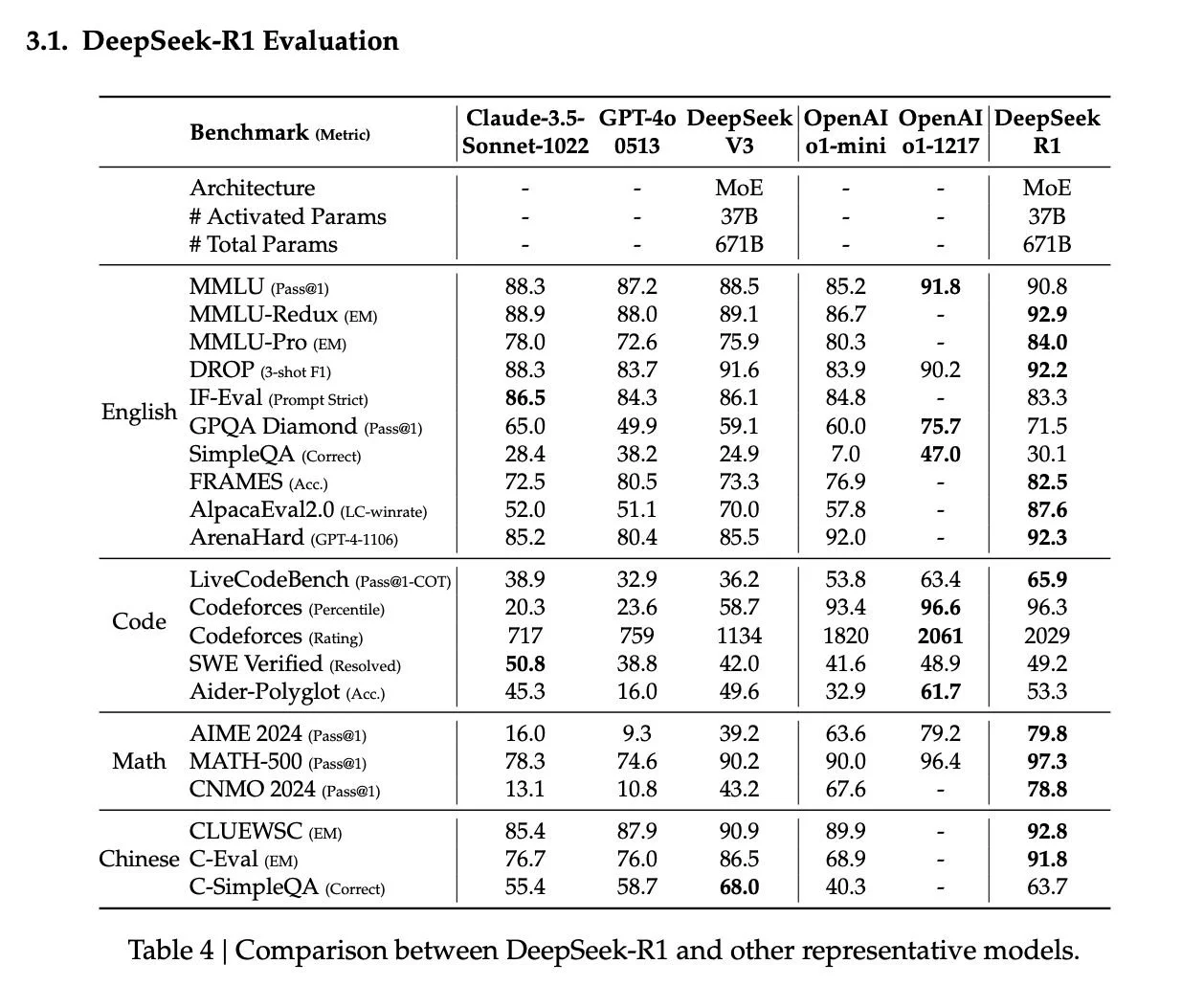

At the core of this design is a Mixture-of-Experts (MoE) architecture, in which roughly 37 billion of the model's 671 billion parameters are activated for each token it processes. This allows different experts to specialise in different kinds of input while the model runs at a fraction of the computational cost of activating every parameter.
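To make the "team of specialised experts" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not DeepSeek's actual router: the eight toy experts, the random router scores, and the names `route_top_k` and `moe_forward` are all hypothetical, and a real model would use learned neural sub-networks and a learned gating layer.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_scores, k):
    """Combine outputs of the selected experts only; the others are never called."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


# Toy setup (assumption): 8 experts, each a simple scalar function standing in
# for a neural sub-network, with 2 experts activated per input.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)  # stand-in for a learned router's scores
router_scores = [rng.uniform(-1, 1) for _ in range(NUM_EXPERTS)]

selected = route_top_k(router_scores, TOP_K)
output = moe_forward(1.0, experts, router_scores, TOP_K)
print(f"active experts: {[i for i, _ in selected]} of {NUM_EXPERTS}")
print(f"output: {output:.3f}")
```

The point of the sketch is that compute scales with the number of selected experts rather than the total number of experts, which is why a very large model can run with only a small share of its parameters active.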

What makes DeepSeek different

Efficiency: Only about 5.5% of parameters (37 billion of 671 billion) are activated per token, sharply lowering compute costs.

FP8 precision training: Reduces memory use and computational demands.

128K-token context window: Improves long-context handling.

Open source: Offers researchers and startups a viable alternative to proprietary LLMs.

Source: DeepSeek AI (2025), DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning.
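The headline numbers above can be checked with some back-of-envelope arithmetic. The sketch below uses only the parameter counts quoted in this article; the helper `weight_memory_gb` is a hypothetical name, and the memory figures are a simplifying assumption that counts raw weight storage only (no activations, optimizer state, or components kept at higher precision).

```python
# Parameter counts quoted in the article.
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9

# Share of the model that is active for a single token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"active share per token: {active_fraction:.1%}")


def weight_memory_gb(num_params, bits_per_param):
    """Raw storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: every weight stored at the stated width, nothing else counted.
fp8_gb = weight_memory_gb(TOTAL_PARAMS, 8)
bf16_gb = weight_memory_gb(TOTAL_PARAMS, 16)
print(f"weights at 8 bits:  {fp8_gb:.0f} GB")
print(f"weights at 16 bits: {bf16_gb:.0f} GB")
```

Under these assumptions the active share works out to roughly 5.5%, and storing weights in 8 bits halves the footprint relative to 16 bits, which gives a rough sense of where the memory savings come from.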

Why this matters beyond AI researchers

DeepSeek represents more than a technical milestone — it signals a strategic moment in China’s innovation trajectory.

China’s AI strategy is accelerating: Despite U.S. chip restrictions, DeepSeek suggests that constraints can fuel innovation rather than hinder it.

Policy backing: The Bank of China’s RMB 1 trillion “AI Industry Chain Action Plan” aims to boost national self-reliance, strengthen AI infrastructure, and support key sectors such as robotics, biomanufacturing, advanced materials, and the low-altitude economy (drones, urban air mobility).

Market implications: AI pricing models may shift. If open-source models like DeepSeek can match the performance of proprietary models, Big Tech may need to rethink its access and monetisation strategies.

The broader geopolitical questions

Does DeepSeek signal a shift in AI leadership away from the West?

Will U.S. technology restrictions slow China down, or accelerate domestic innovation?

Could this spur a Western policy response through tighter national-security frameworks?

Will open-source AI ultimately undermine Big Tech’s dominance?

DeepSeek R1 is not just a technological breakthrough; it is a geopolitical statement. It shows that efficiency and accessibility can compete with sheer compute power, raising serious questions about who will dominate the next era of AI innovation.
